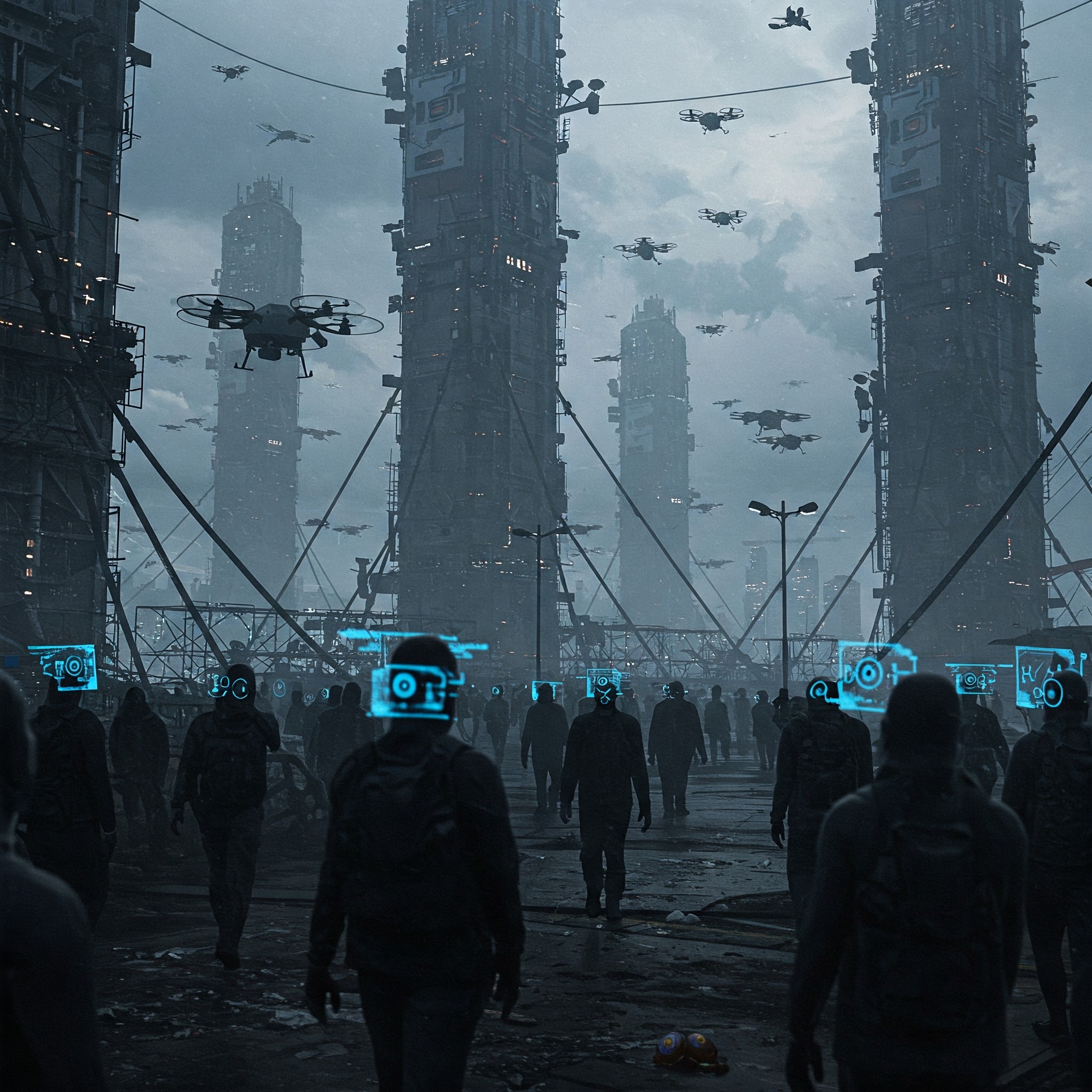

Facial recognition and data-mining AI technologies pose significant threats to personal privacy and freedom, raising ethical, legal, and societal concerns. Here’s how they contribute to privacy invasion and mass surveillance:

1. Mass Surveillance & Loss of Anonymity

Government & Law Enforcement Overreach: Many governments use facial recognition for public surveillance, tracking citizens without consent. This enables authoritarian control, stifles dissent, and chills free expression.

Public Space Monitoring: Cameras with AI-powered facial recognition in streets, airports, and stores can erase anonymity, turning public space into a panopticon where any movement may be logged.

2. Corporate Data Exploitation

Social Media & Advertising: Companies like Meta (Facebook), Google, and TikTok have collected facial data from the photos and videos users upload. Data of this kind can feed hyper-targeted profiles that are used to influence behavior and preferences.

Retail & Banking Surveillance: Some stores use facial recognition to track shoppers, while banks employ AI-driven identity verification, often without clearly telling customers how their biometric data is stored or shared.

3. Bias & Discrimination

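Racial & Gender Bias: Studies, including a 2019 evaluation by the U.S. National Institute of Standards and Technology (NIST), have found that many facial recognition algorithms are less accurate for women and people of color. False matches have contributed to wrongful arrests (e.g., cases in the U.S. where Black men were falsely identified).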

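Predictive Policing: Systems trained on historical policing data tend to send more surveillance to communities that were already heavily policed, so AI-driven surveillance can disproportionately target marginalized communities and reinforce systemic discrimination.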

4. Lack of Consent & Legal Protections

Covert Data Collection: Many people are unaware they’re being scanned or profiled, violating the principle of informed consent.

Weak Regulations: Laws like GDPR (EU) and CCPA (California) provide some protections, but enforcement is inconsistent, and many countries lack strong privacy laws.

5. Chilling Effects on Freedom

Self-Censorship: Knowing you’re being watched alters behavior, discouraging activism, free speech, and dissent.

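Social Credit Systems: In countries like China, AI surveillance is used alongside social credit and blacklist schemes to enforce compliance, and people flagged by these systems can face restrictions on their freedoms, such as limits on travel.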

6. Cybersecurity Risks

Data Breaches: Stored facial recognition data is a goldmine for hackers and can be used for identity theft and fraud. Unlike a password, a face cannot be changed after a breach, so the exposure is permanent.

Deepfake Misuse: AI can manipulate facial data to create fake videos, enabling blackmail or disinformation campaigns.

How to Resist & Protect Privacy

Demand Stronger Laws: Push for bans or strict regulations on facial recognition (e.g., San Francisco’s 2019 ban on its use by city agencies, which does not cover private businesses).

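Use Privacy Tools: Encrypted messaging (Signal) and VPNs reduce the data trail available for mining, while anti-facial recognition clothing or glasses may make camera-based identification harder, though their effectiveness varies.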

Support Ethical AI: Advocate for transparent, bias-free AI development with public oversight.
