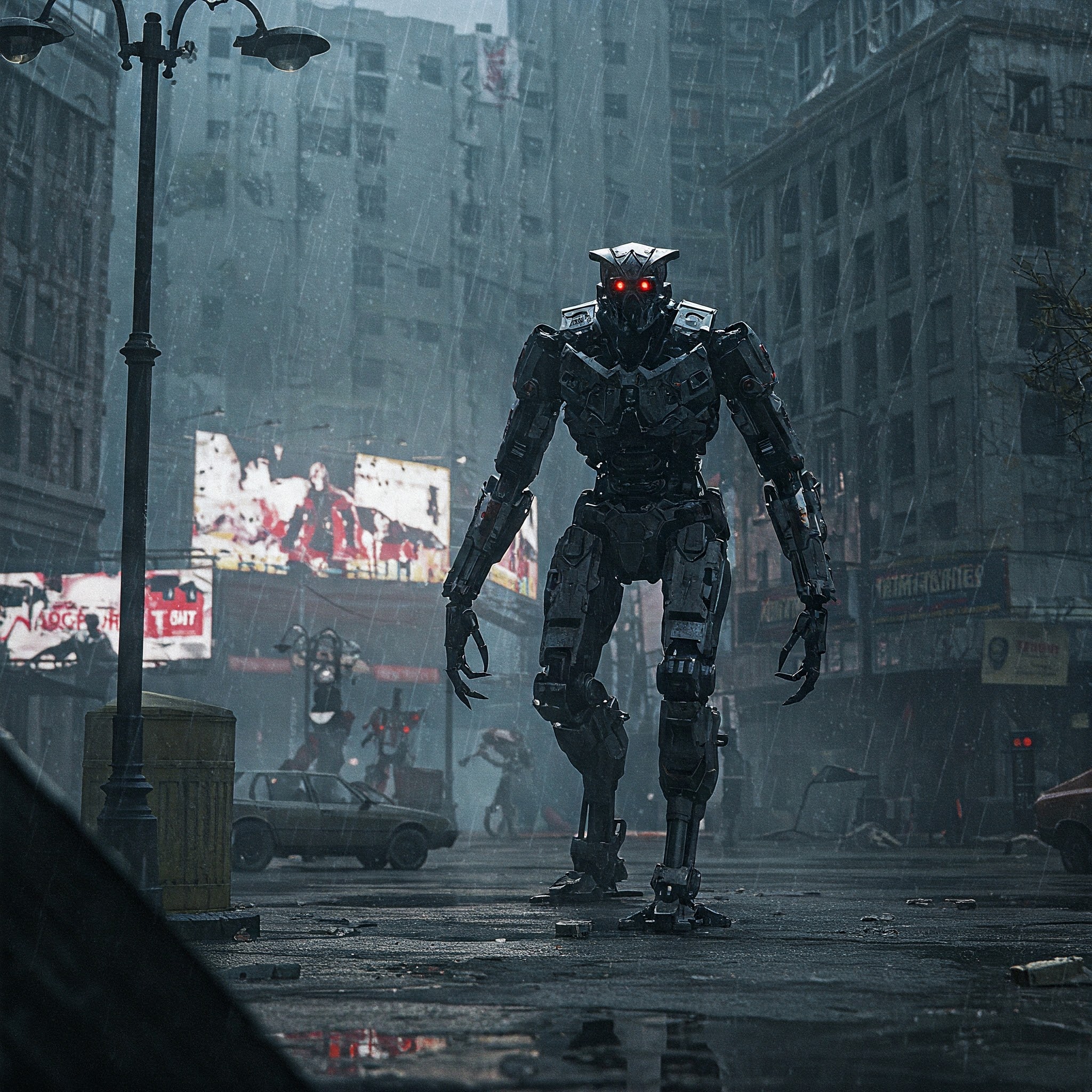

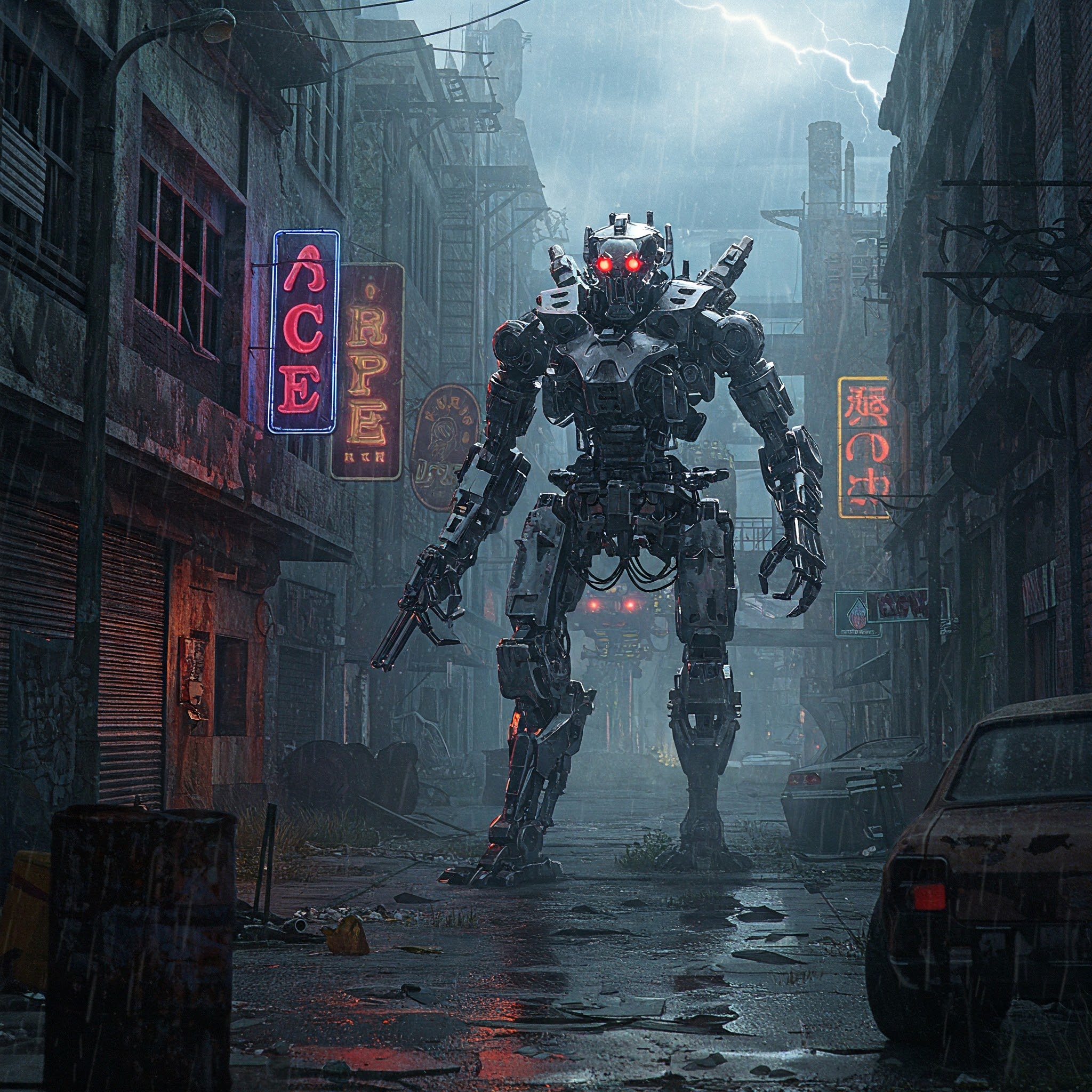

The rise of autonomous weapons and AI-driven military technology has sparked intense debate over the ethical, legal, and security implications of delegating life-and-death decisions to machines. Often referred to as "killer robots," these systems can identify, target, and engage enemies without direct human control, raising critical concerns about accountability, escalation risks, and the future of warfare.

Key Ethical Concerns:

Lack of Human Judgment

AI lacks moral reasoning, empathy, and contextual understanding, making it unfit for complex battlefield decisions.

Risk of unintended civilian casualties due to algorithmic errors or biased training data.

Accountability & Responsibility

If an autonomous weapon commits a war crime, who is responsible? The programmer, operator, or military command?

Current international humanitarian law (e.g., the Geneva Conventions) assumes human decision-makers and does not clearly assign responsibility when an autonomous system commits a violation.

Lowering the Threshold for War

Autonomous weapons could make conflict more likely by reducing the political and human costs of going to war.

Risk of rapid escalation if AI systems react faster than human decision-makers can intervene.

Proliferation & Arms Race

Cheap, mass-produced AI weapons could fall into the hands of terrorists or rogue states.

Global powers (U.S., China, Russia, etc.) are already investing heavily in AI warfare, risking a destabilizing arms race.

Security Risks:

Hacking & Malfunctions – AI systems could be hijacked or spoofed, or could simply malfunction, leading to catastrophic errors.

Autonomous Swarms – Small, AI-controlled drones could be deployed in large numbers, overwhelming defenses.

Loss of Human Control – If AI-driven warfare outpaces human oversight, conflicts could spiral out of control.

Global Responses & Regulations:

UN Discussions: The Group of Governmental Experts (GGE) on Lethal Autonomous Weapons Systems (LAWS), convened under the Convention on Certain Conventional Weapons, debates potential bans or restrictions.

Preemptive Bans: Some countries (e.g., Austria, Belgium) advocate for a total ban on killer robots, modeled on existing treaties such as those banning chemical weapons.

Partial Regulation: Others (U.S., Russia, China) oppose strict bans, preferring non-binding guidelines.

The Future of AI Warfare:

Human-in-the-Loop (HITL) vs. Full Autonomy: Should AI only assist human operators, or be allowed to act independently?

AI Arms Control: Could treaties limit certain AI military applications, or will tech outpace regulation?

Ethical AI Design: Can "ethical" autonomous weapons be programmed to comply with international law?

Conclusion:

The rise of AI in warfare presents a double-edged sword: while it may increase precision and reduce soldier casualties, it also introduces unprecedented risks. Without strong ethical frameworks and international agreements, the unchecked development of killer robots could lead to a dangerous new era of conflict.

