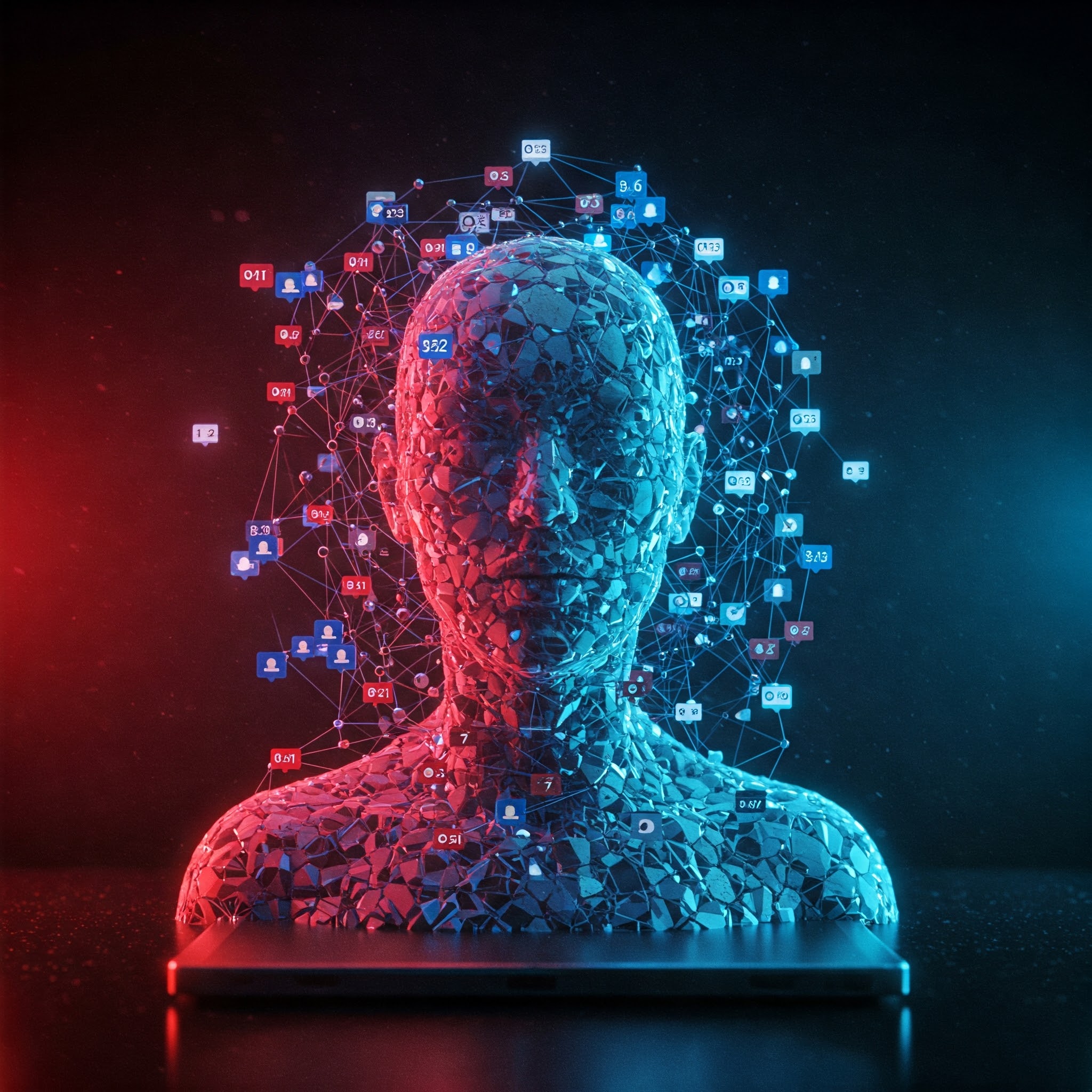

AI-driven social media algorithms that shape opinions and foster addiction are a growing concern in today's digitally dominated world. Powered by advanced machine learning, these algorithms are designed to maximize user engagement, often at the expense of mental health, democratic discourse, and individual autonomy. Here’s a breakdown of the key risks:

1. Opinion Manipulation & Echo Chambers

Personalized Content Bubbles: AI algorithms curate content based on user behavior, reinforcing existing beliefs and creating ideological echo chambers. This limits exposure to diverse perspectives and can deepen polarization.

Disinformation & Propaganda: Bad actors exploit AI-driven recommendation systems to amplify misleading or extremist content, manipulating public opinion (e.g., election interference, conspiracy theories).

Behavioral Microtargeting: AI models infer psychological profiles from user data to deliver hyper-personalized ads or political messaging, subtly shaping decisions (e.g., Cambridge Analytica-style influence).
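The feedback loop behind content bubbles can be illustrated with a deliberately simplified simulation. This is a minimal sketch under stated assumptions, not how any real platform works: the topic names, the affinity scores, the `reinforcement` parameter, and the greedy "show whatever has engaged best so far" policy are all hypothetical. Each exposure nudges the simulated user's affinity for the shown topic upward, standing in for belief reinforcement.

```python
from collections import Counter


def simulate_feed(affinity, rounds, reinforcement=0.05):
    """Toy engagement-maximizing feed (hypothetical model, not a real platform's algorithm).

    affinity: dict mapping topic -> engagement score in [0, 1].
    Each round the feed shows one topic; the user's engagement equals their
    current affinity for it, and viewing it raises that affinity slightly.
    """
    affinity = dict(affinity)            # copy so the caller's dict is not mutated
    total = {t: 0.0 for t in affinity}   # summed observed engagement per topic
    shown = {t: 0 for t in affinity}     # impressions per topic
    history = []
    for _ in range(rounds):
        # Try every topic once first; afterwards greedily pick the topic
        # with the highest average observed engagement (no further exploration).
        untried = [t for t in affinity if shown[t] == 0]
        if untried:
            topic = untried[0]
        else:
            topic = max(affinity, key=lambda t: total[t] / shown[t])
        total[topic] += affinity[topic]
        shown[topic] += 1
        # Hypothetical reinforcement: exposure makes the user like the topic more.
        affinity[topic] = min(1.0, affinity[topic] + reinforcement)
        history.append(topic)
    return history


history = simulate_feed(
    {"viewpoint_a": 0.6, "viewpoint_b": 0.4, "science": 0.5, "sports": 0.3},
    rounds=20,
)
print(Counter(history))
```

With these made-up numbers, after trying each topic once the feed locks onto `viewpoint_a`, which receives 17 of the 20 impressions: the other topics are never shown again, so their observed engagement never has a chance to change. Real recommenders add exploration and many other signals, but this narrowing of exposure is the mechanism the echo-chamber concern describes.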

2. Addiction & Mental Health Risks

Dopamine-Driven Engagement: AI optimizes for "time spent" by exploiting psychological triggers (endless scrolling, notifications, variable rewards), leading to compulsive usage akin to gambling addiction.

Mental Health Decline: Studies link excessive social media use to anxiety, depression, and low self-esteem, especially among young people, to whom algorithms can push harmful content (e.g., unrealistic beauty standards, self-harm).

Loss of Autonomy: Users report feeling "hooked" without making a conscious choice, as AI systems predict and steer their behavior in ways that can override their self-control.

3. Societal & Democratic Threats

Erosion of Critical Thinking: When AI dictates information flow, people may lose the ability to evaluate sources or think independently.

Amplification of Extremism: Because outrage and sensationalism drive engagement, engagement-optimized algorithms tend to prioritize them, fueling radicalization and social division.

Surveillance Capitalism: AI monetizes attention by harvesting data, turning users into products while undermining privacy.

Possible Solutions & Mitigations

Algorithmic Transparency: Mandate disclosure of how AI recommendations work (e.g., the EU’s Digital Services Act).

Ethical AI Design: Prioritize user well-being over engagement metrics (e.g., "time well spent" initiatives).

Media Literacy Education: Teach critical thinking to counteract manipulation.

Regulation & Oversight: Governments must curb exploitative practices (e.g., banning addictive dark patterns).
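The trade-off behind "Ethical AI Design" can be sketched as a change to a ranking objective. In this hypothetical example, each post carries a predicted engagement score and a predicted harm score (for instance, how likely it is to provoke outrage). Both scores, the post titles, and the `harm_weight` parameter are invented for illustration; real feed-ranking systems are far more complex.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical model output in [0, 1]
    predicted_harm: float        # hypothetical outrage/harm score in [0, 1]


def rank(posts, harm_weight=0.0):
    """Sort posts by engagement minus a weighted harm penalty, highest first.

    harm_weight=0.0 reproduces pure engagement ranking.
    """
    return sorted(
        posts,
        key=lambda p: p.predicted_engagement - harm_weight * p.predicted_harm,
        reverse=True,
    )


posts = [
    Post("Outrage bait", 0.9, 0.9),
    Post("Local news explainer", 0.6, 0.1),
    Post("Friend's update", 0.5, 0.0),
]
print([p.title for p in rank(posts)])                   # engagement only
print([p.title for p in rank(posts, harm_weight=0.5)])  # with harm penalty
```

With `harm_weight=0.0` the outrage post ranks first because it has the highest predicted engagement; with `harm_weight=0.5` it drops to last. Estimating harm reliably, and deciding how heavily to weigh it, are the hard design questions; this sketch only shows where such a penalty would enter the objective.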
